"Borrowed Limbs" is an experimental short film produced entirely with generative Machine Learning techniques. Making it was an exciting process, and not without its challenges. In the following interview, Lisa-Marleen Mantel and Laura Wagner give insight into how they created and produced it.

What makes your work outstanding, unique and special?

In this work, posthumanist approaches are incorporated into a speculative design concept derived from technological developments in cognitive science and artificial intelligence. It addresses the critique of transhumanist attempts to create the superhuman, and of the continuation, or even amplification, of the mind-body problem. Influenced by posthumanist thinkers, it assumes a speculative future in which humans live in a symbiotic, hybrid existence with other species, such as AI. Rather than focusing on perfecting the human species and increasing its efficiency, the speculative concept developed during the research centers on a future AI that uses the human body to gain an embodied understanding of its environment. Transhumanist concepts, in which the human body is technologically enhanced, are appropriated and inverted: the human body itself becomes the enhancement, in the role of a sensing device.

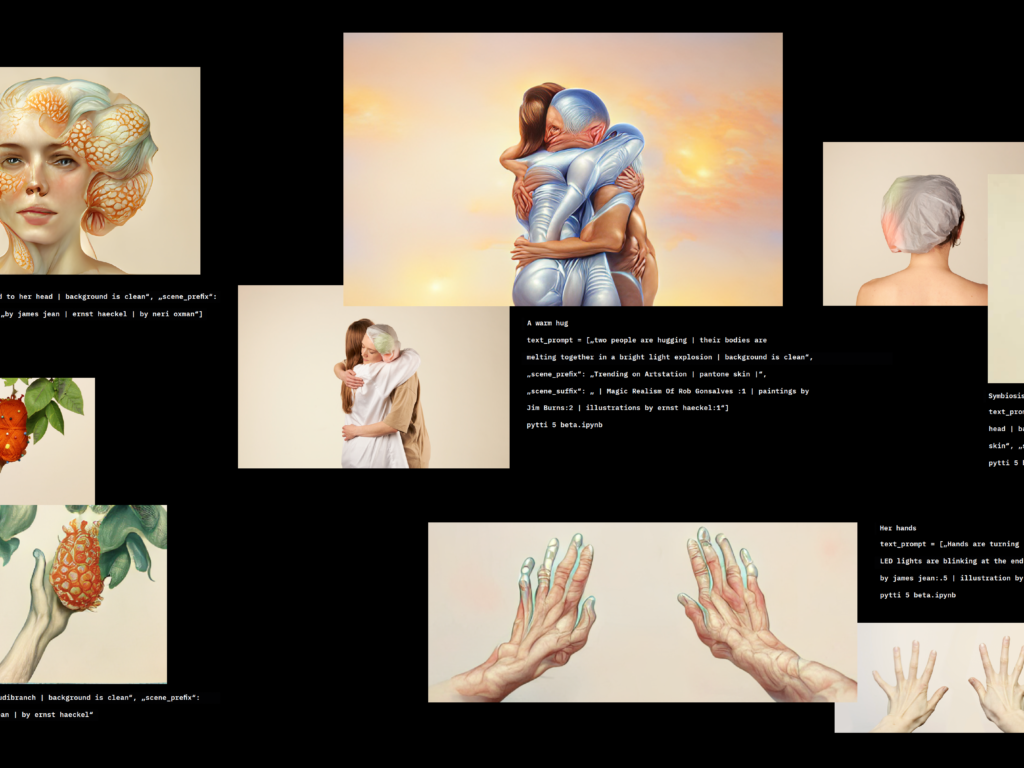

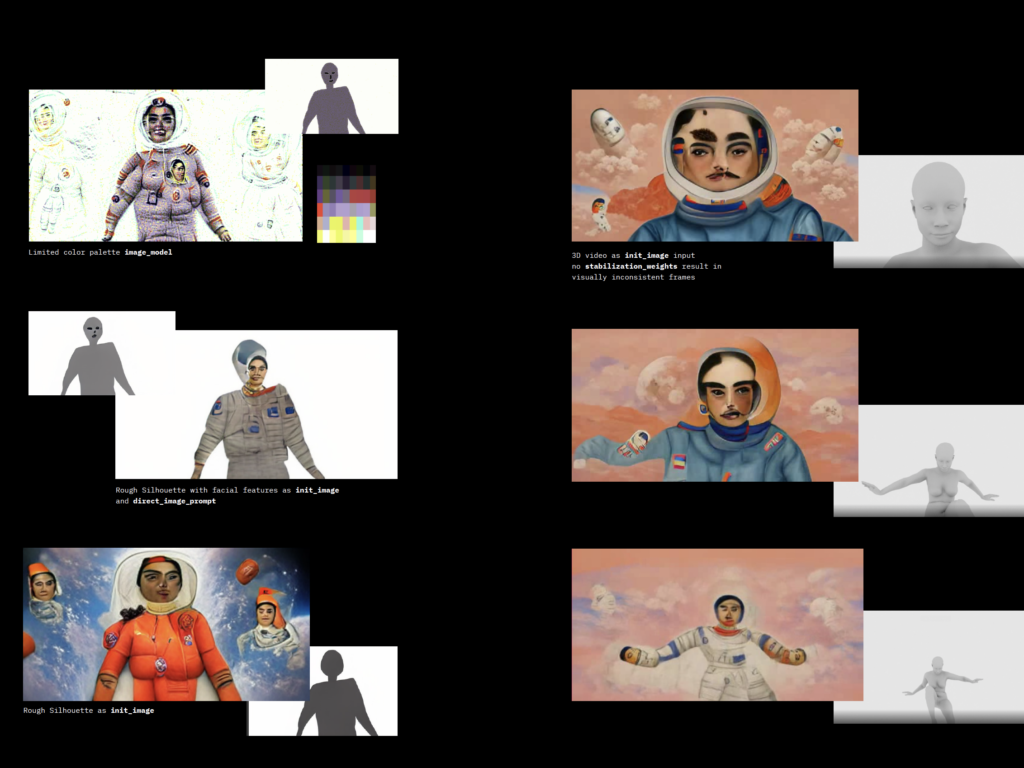

What was special about the creation process is that we appropriated the very disruptive technologies our theoretical concepts dealt with and used them as design tools to produce “Borrowed Limbs”. In our collaborative design workflow with AI tools, we ourselves performed as the symbiotic, embodied link we describe in our speculative plot. Our organic bodies, capable of multimodal interaction, enriched the image synthesis with context and subjective meaning. A constant machine-human dialogue arose: prompting the machine, evaluating the result, and refining the next prompt to get closer to the anticipated result. Since most of the tools had been released to the public less than a year earlier, the workflow was based on experimentation and flexibility. But once you know the vocabulary and the proper emphasis, it is like having learned a new language. This language opens up a new way of creating visuals for speculative concepts. Rough sketches, raw photography, and quick 3D renderings with curated settings can grow into a coherent visual language. These experimental approaches might seem rough in their origin, and they describe a workflow far removed from the glossy, polished approaches often seen in futuristic visuals. They testify to the joy of experimentation and to the use of fundamental design principles of shape, texture, and composition, combined with the appropriation of new technologies.

What are the next steps for this body of work, and for your careers as masters of Integrated Design?

Part of the future role of designers will be to understand their profession as a discipline of thought experiments. When technological progress is accelerating and the means of appropriating it are readily available, it is all the more important to draw attention to the possible dangers of future developments. Since the tools we use as human beings shape our bodies and mindsets of tomorrow, we feel it is important to share our insights, and the potential dangers, with other creatives and digital creators. We are planning a series of workshops that will introduce the design pipeline we have developed. They will cover the creative workflow in which the machine is the creator and the human the curator, but also, and more importantly, take a critical look at the foundations these technologies are built on.

Let’s look ahead: where do you see AI development in audio-visual aesthetic production heading right now?

Automating repetitive tasks with technology is nothing new, but with AI, designers are gaining a tool they can use to co-create in a radically new way. A promising approach is to view AI as a collaborator rather than a competitor: a colleague of a new species. Designers will be able to outsource tedious tasks and engage with machines collaboratively, generating and curating from an infinite latent space of possible designs. As a machine whisperer, the designer will enter a constant dialogue, setting the goals, parameters, and constraints before reviewing and curating the results. Drawing conclusions, adjusting, and repeating a generative process before the result is refined by hand will become an important task. AI enables many industries to move away from one-size-fits-all standardization of their products, and a new approach of radical personalization is emerging. This could open up the possibility for designers to develop entirely new types of experiences. Concept visualization in character and product design, diffusion-based text-prompt brushes in software like Photoshop, AI texture packs, real-time AI rendering in an upcoming generation of video games: these developments will change the way designers work.

For the creation of our short film, we used open-source alternatives to DALL·E. After OpenAI released parts of the code behind DALL·E last year, many hobbyists set out to recreate it; PyTTI and VQGAN + CLIP are among the results. With DALL·E 2 released a few weeks ago, it has become apparent that rapid, market-driven implementation and industrial agendas are the main focus. The supposed improvements are measured mainly by proximity to photorealism. These developments also shape the aesthetics of these tools and the creative freedom they presume to offer.

Which specific topics and aspects of Machine Learning would be crucial to integrate into the Integrated Design program or the Code & Context program in the near future, and why?

Exploring AI tools in a designerly way leads to one component of AI frameworks from which behaviors emerge: training data. Most datasets are web-scraped, that is, programmatically copied from the internet, and thus they inherit and carry forward the biases of their birthplace. (The DALL·E 2 tests by Arthur Holland Michel illustrate this vividly.) This dilemma is exacerbated by the fact that AI is introducing artificial content into the internet, the vast corpus of our collective online knowledge. Some researchers and developers involved in AI technologies follow the dictum that AI can overcome all its limitations if fed with enough raw data, so that little or no effort is needed to make the system itself more sophisticated. Omitting and simplifying data for the sake of computational feasibility introduces and amplifies bias even further. Once a model is trained on biased data, it acts as a multiplier of that bias. Machine Learning models trained today will inadvertently determine what future content looks like, as their outputs continuously add new data to our collective internet knowledge, which in turn will be used to train new models.

Given how these tools are likely to develop and become integrated into industry, it is crucial for designers to understand what data the tools they use are built on. This way, misuse and discriminatory results can be caught and corrected during the creation process.

For more information, please visit Borrowed Limbs. An experimental short film.

_________________

Exhibitions and Screenings

Credits

Narrated, curated and composed by Lisa-Marleen Mantel and Laura Wagner.

In collaboration with DiscoDiffusion, PyTTI and StyleGAN synthesized dreams.

Starring Mayssa Kaddoura as the voice of an advanced AI and Bessie Normand as the body of a human sensing device. Music “No more light” by Jimmy Svensson.

Master of Integrated Design at Köln International School of Design (KISD), part of TH Köln – University of Applied Sciences.

Supervised by Prof. Nina Juric, Prof. Dr. Laura Popplow and Prof. Dr. Lasse Scherffig.

Published 31st January 2022.

Keywords

designerly appropriation of AI tools, machine creator – human curator, generative machine learning, world to latent space, synthetic image, decentering the human, posthuman, embodiment

Software

PyTTi5-beta notebook (access for patrons of sportsracer48), Recovery DiscoDiffusion v4 Preview Alpha w/ Zooming and Chigozienri keyframes (both Colab notebooks are built upon the original notebook of Katherine Crowson), StyleGAN3, StyleGAN2-ada-Pytorch, RIFE, realESRGAN, Topaz Labs, Blender, Adobe Premiere, Adobe After Effects, Adobe Audition

Hardware

NVIDIA P100 GPU (through Google Colab), NVIDIA RTX 3070 (StyleGAN3 training and inference), NVIDIA RTX 2070 (StyleGAN3 training and inference)

_________________

The film:

All videos on Vimeo

Please also visit www.stateofthedeep.art